Do search engines and other content distribution platforms have a responsibility to present information that is true? Columnist Janet Driscoll Miller discusses the issues surrounding this question.

Since the election, there’s been a lot of discussion about fake news and its ability to sway the masses toward false perceptions. Clearly, creating false perceptions through mass media is dangerous, and it can greatly influence public opinion and policy.

But what about search engines and other content distributors? Even before the US election, German Chancellor Angela Merkel warned that search engine algorithms, “when they are not transparent, can lead to a distortion of our perception, they can shrink our expanse of information.” What responsibility, then, does a search engine have to produce truthful information?

Is Pluto a planet?

Getting at truth can be tough because not everything is black and white, especially in certain subjects. Take, for example, good old Pluto. Many of us grew up learning that Pluto is a planet. Then, in 2006, astronomers ruled that it was no longer a planet.

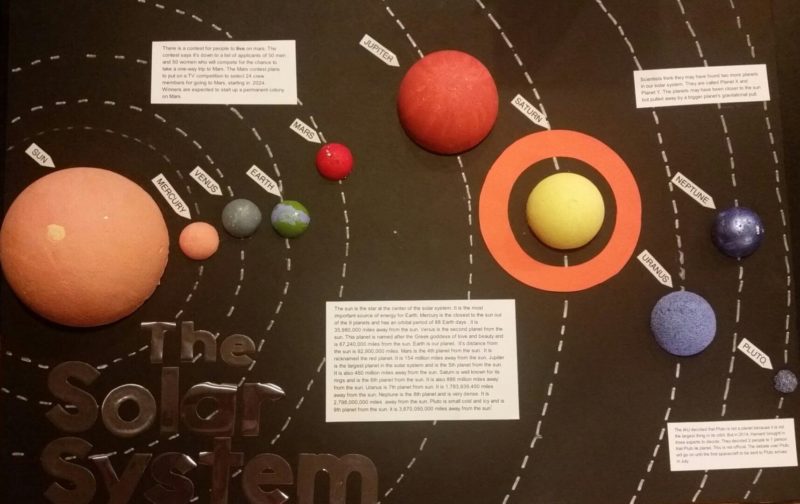

But in the last few years, Pluto’s planetary designation seems to have been in dispute. As I was helping my daughter with her solar system project for school, I questioned if we should add Pluto as a planet or if it should be left off. What is Pluto’s planetary status now?

Unfortunately, the answer still wasn’t clear. The International Astronomical Union (IAU) determined that Pluto is not a planet because it only meets two of their three criteria for planetary status:

- Orbit around the sun (true).

- Be spherical (true).

- Have cleared the neighborhood around its orbit, meaning it is the dominant body there (not true).

In fall 2014, the Harvard-Smithsonian Center for Astrophysics held a panel discussion on Pluto’s planetary status with several leading experts: Dr. Owen Gingerich, chair of the IAU planet definition committee; Dr. Gareth Williams, associate director of the Minor Planet Center; and Dr. Dimitar Sasselov, director of the Harvard Origins of Life Initiative. Interestingly, even Gingerich, despite chairing the committee that defined the term, argued that “a planet is a culturally defined word that changes over time,” and that Pluto is a planet. Two of the three panelists, including Gingerich, concluded that Pluto is indeed a planet.

Confused yet? Sometimes multiple reputable organizations debate two potential truths. And that’s OK. Science is about always learning and discovering, and new discoveries may mean that we have to rethink what we once considered fact.

A problem bigger than the smallest planet

While my Pluto example is fairly harmless and hopefully less controversial, there are clearly topics in science and beyond that can lead to dangerous thinking and action based on little or no proven fact.

The issue, especially in science and research, delves much deeper, though. Even if a research study is performed and demonstrates a result, how dependable is that result? Were the methodology and sample size appropriate? All too often, we see sensational clickbait headlines for studies, as John Oliver shared earlier this year:

Hey, who doesn’t want to drink wine instead of going to the gym?

But the methodology around some of these research reports can be truly suspect. Sweeping generalizations, especially around the health of humans and the environment, can be incredibly dangerous.

In the video segment, Oliver shares a story published by Time magazine, which I would normally consider a reputable source. The article is about a study which, Time claims, suggests that “smelling farts can prevent cancer.” Now, while this particular study did not actually make that claim, if you search for “smelling farts can prevent cancer” on Google, here are the results:

Google has even elevated the false information to a Google Answer at the top of the page. In fact, the first result disputing this false claim doesn’t even appear above the scroll line.

The media and clickbait

As Oliver points out in the video, the problem is larger than just users sharing and buying into this information. Rather, there’s a deeper issue at play here, and it centers around what’s popular. Most of us are familiar with clickbait — outrageous headlines created to entice us to click on an article. In an effort to compete to get the most clicks (and thus ad revenue), media outlets have resorted to trying to share the most outrageous news first.

The problem for Google is that much of their algorithm relies on the authority of a site and inbound links to that website. So if a typically authoritative site, such as CNN, posts stories that are not fact-checked, and then we share those links, those two actions are helping to boost the SEO for the incorrect information.

But isn’t it much more fun to think that drinking wine will spare me from having to go to the gym? That’s essentially why we share it.

Why fact checking is hard, manual work

If media outlets and websites aren’t fact checking, how can Google do this? There are certainly a number of sites dedicated to fact checking and rumor validation, such as Snopes and PolitiFact, but they also rely on human editors to pore over articles and fact-check claims.

Last year, Google engineers outlined in a research paper how they might incorporate a truthfulness measurement into the ranking algorithm. But can that really be done? Can a simple algorithm separate truth from fiction?

There are many fact-checking organizations, and there’s even an international network of fact-checkers. But ironically, while there are some mutually agreed-upon best practices, there are no set standards for fact-checking — it can vary by organization. Further, to Oliver’s points in the video, fact-checking different topics requires different standards. A scientific study, for instance, may need to be judged on several standards: methodology, duplication of study and so on, whereas political articles likely require on-the-record quotes to verify.

Treating the cause

Google and Facebook both have started taking steps to eradicate fake news. Google announced it would no longer allow sites with fake news to publish ads on those pages, essentially seeking to cut off potential revenue streams for fake news producers that rely on false clickbait to generate income. That’s certainly one cause of false news generation, but is it the only one?

The issue is much deeper than just ad revenue. One of the scientists in Oliver’s video shares how scientists are incentivized to publish research. The competition in journalism and in science to get “eye-catching” results is real. So the root cause can often be more than just ad revenue — it may be just to get noticed. Or further, it may be to promote an agenda, which falls under the umbrella of propaganda.

The other side of the situation: bias and backlash

So what should Google do? The challenge confronting the search engines (and Facebook) is that they may look biased or be accused of promoting and favoring one side over another. Per Merkel’s comments, this stifles debate as well. And as Google has seen numerous times, and as Facebook saw this summer with the accusation that its news feed was liberal-leaning, showing more or less of one side of a story may earn the platform a reputation for being biased.

Further, as we’ve established, truth is not always black and white. Merriam-Webster defines truth as “a statement or idea that is true or accepted as true.” So what if I accept something as true that you do not? Truth is not universal in all cases. For example, an atheist believes that God does not exist. This is truth for the atheist.

As one writer commented in an article on Slate.com, “No one — not even Google — wants Google to step in and settle hash that scientists themselves can’t.” Is it really Google’s responsibility to promote only content it deems through an algorithm to be true?

It certainly puts Google in a tough bind. If they quell sites that they believe are not truthful, they may be accused of censorship. If they don’t, they have the power to potentially sway the beliefs of many people who will believe that what they see in Google is true.

Another answer: education

We’ll never stop clickbait. And we’ll never stop fake news. There’s always a way to work the system. Isn’t that what SEOs do? We figure out what the algorithm wants and respond to it so that our sites rank higher in results. While Google can take steps to try to combat fake news, it will never stop it fully. But should Google stop it completely? That’s a slippery slope.

In conjunction with these efforts, we really have to hold journalism to a higher standard. It starts there. If it sounds too good to be true, you can bet it is. It starts with questioning what we read instead of simply sharing it because it sounds good.

Time for my daily workout: a glass of wine.
